How to Avoid Being Fooled by Randomness When A/B Testing

I was recently asked to answer a similar question on Quora, and I thought it was a great question that warranted a blog post.

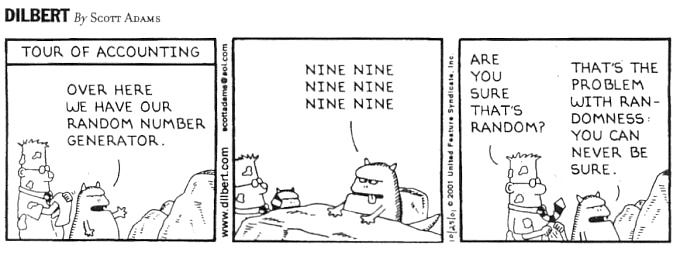

DILBERT © 2001 Scott Adams. Used by permission of UNIVERSAL UCLICK. All rights reserved.

It’s not rare for A/B testers to see results showing that a change to their app (or website, email, etc.) is statistically significant when in fact the change had no impact on their goal or KPI. This happens because “statistically significant” only means that, if the change truly had no effect, there would be a low probability (usually less than 5%) of seeing a difference as large as the one you measured. But a low probability is not zero. At the typical 95% confidence level (a 5% significance level), roughly 1 out of every 20 tests of a change that does nothing will still produce a “statistically significant” result purely by chance. Here is a previous article we wrote that explains this a little more.

Without getting too much into the math theory, false positives due to randomness are much more common than people think. So how do you do your best to avoid wrong answers?

1) Have a concrete hypothesis before you even start experimenting

Not only that, but also know what a good result will look like. Do not just run an A/B test, measure everything, and look for anything that is statistically significant. That’s a good way to get false positives.

In fact, several A/B testing solutions out there tout the fact that they track all your goals, metrics, and KPIs and show you which ones present a statistically significant result without your having to set them up for each test. Sounds great, right? Actually, no. Measuring everything is a great way to get false positives. If you measure 20 unrelated events at p<=0.05 and your change does nothing, there’s roughly a 64% chance that at least one of them looks significant at random. So if you measure every event, goal, whatever in your app (or website, email, etc.), there are bound to be random metrics that show a statistically significant change by chance.
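To put a number on that, here is a minimal sketch of the arithmetic. It assumes the metrics are independent and that the change has no real effect on any of them, which is a simplification since real metrics are often correlated; the function name is made up for this example.

```python
def chance_of_any_false_positive(num_metrics: int, alpha: float = 0.05) -> float:
    """Probability that at least one of `num_metrics` independent metrics
    crosses the significance threshold when the change has no real effect.

    Assumption: metrics are independent, which real metrics rarely are.
    """
    # Each metric stays "not significant" with probability (1 - alpha),
    # so all of them stay quiet with probability (1 - alpha) ** num_metrics.
    return 1 - (1 - alpha) ** num_metrics


for n in (1, 5, 20, 50):
    print(f"{n:>2} metrics: {chance_of_any_false_positive(n):.0%}")
```

Under these assumptions, one metric gives you the familiar 5%, while 20 metrics push the chance of at least one false positive to about 64%.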

The key here is to make sure you know what the right result should look like before you even start.

2) Track the entire funnel

Say you’re testing ideas to increase conversion on the “Add to Cart” button. Make sure you’re not only tracking conversions on that one button, but every conversion point in the checkout flow. All the results together should tell a story. If the results make sense together, you can be more confident that they’re not random.

Tracking the funnel is also really important for understanding your actual KPIs rather than just looking at the first step. For example, say you have a login page that gives customers the option to register, log in, or skip. You want to test whether more users will log in if you remove the skip button. Unsurprisingly, removing the skip button means more people click the registration and login buttons, while the number of people who actually enter the app goes down. However, that doesn’t tell the whole story. Tracking further down the funnel (i.e. tracking the “add to cart” button and purchase completions) can tell you a different one. It’s possible that even though the overall number of people who enter the app is lower, those who get through are more inclined to buy and your purchase completion rate increases.
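The login example can be sketched with a few lines of code. All of the counts below are invented purely for illustration, and the step names and the `funnel_report` helper are hypothetical; the point is only to show how looking at each step, and at the end of the funnel, can change the conclusion.

```python
# Hypothetical counts, made up for illustration only.
funnel = {
    "with skip": {
        "saw login page": 10_000, "entered app": 9_000,
        "added to cart": 900, "purchased": 270,
    },
    "without skip": {
        "saw login page": 10_000, "entered app": 6_000,
        "added to cart": 900, "purchased": 330,
    },
}


def funnel_report(steps: dict[str, int]) -> list[tuple[str, float, float]]:
    """For each step after the first, return
    (step name, rate vs. the previous step, rate vs. the first step)."""
    names = list(steps)
    first_count = steps[names[0]]
    report = []
    for prev, curr in zip(names, names[1:]):
        report.append((curr, steps[curr] / steps[prev], steps[curr] / first_count))
    return report


for variant, steps in funnel.items():
    print(variant)
    for step, step_rate, overall_rate in funnel_report(steps):
        print(f"  {step:<14} {step_rate:6.1%} of previous step, "
              f"{overall_rate:6.1%} of all visitors")
```

With these made-up numbers, removing the skip button loses a third of the people entering the app, yet the share of all visitors who complete a purchase goes up. Looking only at the first step would have pointed the other way.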

John Egan, a growth expert at Pinterest, wrote a great article about how to better leverage conversion funnels here.

3) Iterate

Once you have one result, create the next logical experiment. Randomness won’t stand up to the test of iteration. There’s a fair chance that any single experiment will come out significant by luck, but if you test several different ways of approaching the same hypothesis, there’s a much lower chance that all of those tests give you the same random result. For example, maybe you think that telling people about the high level of security built into your checkout will reassure them and therefore convert more users (like KAYAK did). You can run one test that compares copy saying something like “Don’t worry, your info is safe with us” against having no copy at all. If the copy works better, you can move on to testing different wordings of the security message. If all your tests are consistent with each other and with your theory, you can more safely say the result is not random.
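A rough sketch of why iteration helps: if the underlying idea actually does nothing, each follow-up test is another chance for luck to run out. The function below is a hypothetical helper that assumes the tests are independent; follow-up tests on overlapping users or time periods are not fully independent, so treat it as an optimistic bound rather than an exact figure.

```python
def chance_all_false_positives(num_tests: int, alpha: float = 0.05) -> float:
    """Probability that `num_tests` independent tests of an idea with no
    real effect ALL come out statistically significant by chance.

    Assumption: the tests are independent of one another.
    """
    return alpha ** num_tests


for k in (1, 2, 3):
    print(f"{k} consistent test(s): {chance_all_false_positives(k):.4%}")
```

Under that assumption, the chance of being fooled drops from 5% for one test to 0.25% for two and about 0.0125% for three.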

4) A/A test to build experiment intuition

A/A tests, where both groups see exactly the same experience, are a great way to 1) check your A/B testing setup and see how often random results really occur and 2) build intuition around how to read and interpret results. Here’s an article about how Khan Academy does it.
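You can also get a feel for this without touching production by simulating A/A tests. The sketch below is not how any particular testing tool works; it assumes a simple pooled two-proportion z-test, a made-up 10% conversion rate, and 1,000 users per group, and all function names are hypothetical.

```python
import math
import random


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0  # both groups converted at 0% or 100%: no difference to test
    z = (conv_a / n_a - conv_b / n_b) / std_err
    # For a standard normal Z, P(|Z| > |z|) = erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_aa_tests(num_tests: int = 1000, users_per_arm: int = 1000,
                      true_rate: float = 0.10, alpha: float = 0.05,
                      seed: int = 42) -> float:
    """Run `num_tests` simulated A/A tests and return the share that
    came out 'statistically significant' even though A and B are identical."""
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(num_tests):
        # Both arms draw from the SAME conversion rate: there is no real effect.
        conv_a = sum(rng.random() < true_rate for _ in range(users_per_arm))
        conv_b = sum(rng.random() < true_rate for _ in range(users_per_arm))
        if two_proportion_p_value(conv_a, users_per_arm, conv_b, users_per_arm) < alpha:
            false_positives += 1
    return false_positives / num_tests


print(f"Share of A/A tests flagged as significant: {simulate_aa_tests():.1%}")
```

The share of flagged tests should land somewhere near 5%, which is the point: with identical variants, about 1 in 20 tests still looks like a winner.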

At the end of the day, you can’t guarantee that any given test is 100% accurate. You really have to weigh the cost of knowing with higher confidence against the risk of being wrong (read more here: When NOT to A/B Test Your Mobile App). With some tests, the risk is low. Even if you don’t get a statistically significant result, you might have reasons to choose variant A or B anyway (this happened to Airbnb). As the testing expert, it’s up to you to decide what the risks and upsides are.

Thanks for reading!
