When to Use Multivariate Testing vs. A/B Testing

Choosing between sequential and multivariate testing is difficult if you don’t know the advantages and limitations of each. If you’re looking to optimize your app’s conversion rate, sequential A/B tests, or experiments with two variants, usually do the trick. But in some cases, such as launching a new homepage, multivariate tests can offer more impactful insights.

So how do you choose between multivariate and A/B testing, and when and why should you use each? We’ll explore how these two strategies differ and when to choose multivariate tests.

A/B Testing

Before we get into multivariate testing, let’s take a quick look at A/B testing.

A/B testing is a widely used form of experimentation that helps businesses understand their users and optimize their experiences. This powerful tool helps both digital and mobile teams validate their assumptions and make data-driven decisions about their releases, from testing which UI elements lead to higher engagement to measuring how a modified checkout flow affects orders.

From startups to large tech firms, companies of all sizes and industries rely on A/B testing to make smarter choices. Even the simplest of tests can help steer big decisions. When Google couldn’t decide which of two blue colors they preferred for a certain design element, they reportedly used A/B testing to assess the performance of 41 different shades of blue instead.

Most of us have neither the luxury nor the population size to run dozens of tests over a button color. What’s important is to ensure that the A/B tests you do run reach statistical significance and give you meaningful results you can iterate on. Every time a user engages with your mobile app is an opportunity to learn how you can optimize that experience using A/B testing.
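For a conversion-rate test with two variants, one common way to check significance is a two-proportion z-test. The sketch below is a minimal version using only the Python standard library. The function name and the counts are hypothetical, and it assumes a fixed sample size chosen before the test starts (it does not handle peeking at results or early-stopping rules).

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: both variants convert at the same rate."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate, assuming the null hypothesis of no difference.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts, for illustration only.
z, p = two_proportion_z_test(conv_a=480, n_a=10_000, conv_b=560, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("significant at alpha = 0.05" if p < 0.05 else "not significant at alpha = 0.05")
```

The significance threshold (0.05 here) should be fixed before the test runs, not chosen after looking at the p-value.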

There are essentially two ways of doing A/B testing: sequential and multivariate. Below, we’ll explain the difference between them, as well as how to tell which approach is right for your experimentation goal.

Sequential A/B Testing

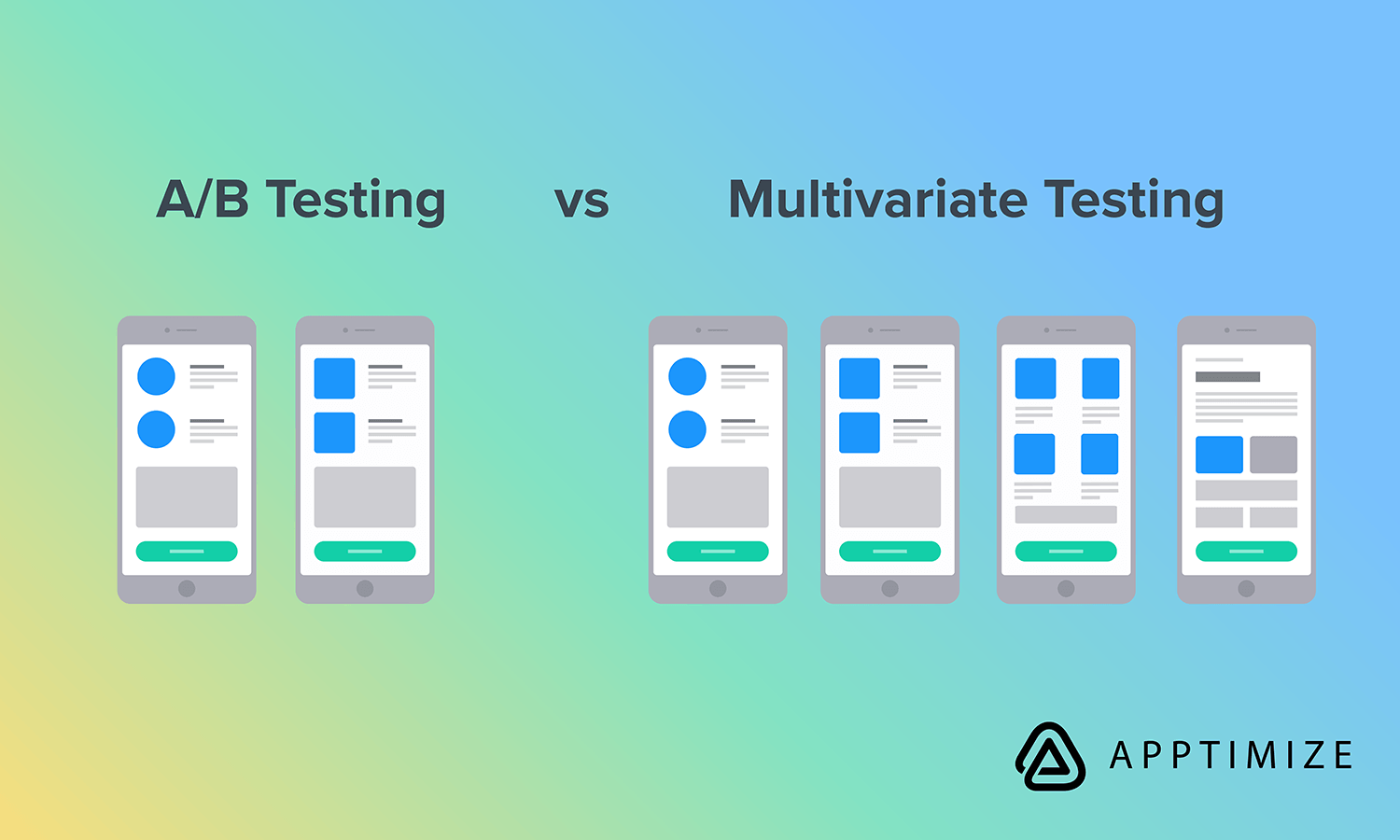

Sequential A/B tests compare two versions of a product. The changes that you test can be of any kind or size. Sequential testing often involves major changes, because you have a finite number of visitor impressions or mobile sessions and you want to get the most value from these experiments.

Also known as split testing, sequential A/B testing is a good route when you are making design decisions or nearing the end of the design process. The aim of split testing is to compare two different versions of a design to see which one performs better. Each user sees just one design (A or B), even if they return to or refresh the interface. With an even split, roughly the same number of people view each version, and you can measure whether either version achieved a lift that you would consider meaningful.
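Keeping each user in a single variant is usually done with deterministic assignment: hash a stable user ID and map the hash to a bucket. A minimal sketch, assuming every user has a stable ID; `assign_variant` and the experiment name are hypothetical and not part of any particular SDK.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants: tuple[str, ...] = ("A", "B")) -> str:
    """Deterministically map a user to one variant of a given experiment."""
    # Hashing the experiment name together with the user ID lets the same user
    # land in different buckets across unrelated experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Use the first 8 bytes of the digest as an integer and pick a bucket.
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


# The same user always gets the same variant, however often they return.
assert assign_variant("user-42", "signup-page") == assign_variant("user-42", "signup-page")

# Across many users, the split comes out close to 50/50.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "signup-page")] += 1
print(counts)
```

Because assignment depends only on the user ID and experiment name, no per-user state needs to be stored to keep the experience consistent.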

For example, Netflix used sequential A/B testing to assess the performance of its registration page in five head-to-head experiments against different variants. Each of these variants gave users more information by letting them browse the Netflix catalog. However, the tests showed that the original page with no catalog information still led to the most signups.

This finding is particularly fascinating because it flew in the face of Netflix’s expectations. Even though letting users browse the Netflix catalog helped to demonstrate the service’s value, it was more important to make barriers to entry as low as possible.

As Netflix discovered, experimentation is crucial for testing your hypotheses and avoiding bad assumptions. Sequential A/B testing will likely give you a clear answer as to which of two options performs better.

Multivariate A/B Testing

Like sequential testing, multivariate testing is a way to test changes to a website or app. However, it usually involves more subtle changes to a greater number of variables. Multivariate testing looks at different combinations of at least two variables, so you can measure the effect of several design elements across all of their possible combinations.

Multivariate tests require a bit more statistical knowledge from the research team, but they are a valuable tool when you are in the early stages of experimenting with many different ideas.

For example, you might test a few different ideas for each of several design elements on your UI: the header, the call-to-action text, and the information that users provide when registering. This complexity adds up quickly. Even with three different ideas for each of three elements, you’re already looking at 3 × 3 × 3 = 27 different combinations to test.
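Testing every combination like this is called a full-factorial design. A short Python sketch that enumerates every cell; the element names and the variant labels (`H1`, `C1`, `F1`, and so on) are placeholders.

```python
from itertools import product

# Hypothetical ideas for each element; the labels are placeholders.
elements = {
    "header": ["H1", "H2", "H3"],
    "cta_text": ["C1", "C2", "C3"],
    "signup_fields": ["F1", "F2", "F3"],
}

# Full-factorial design: every idea for each element paired with every idea for the others.
combinations = [dict(zip(elements, levels)) for levels in product(*elements.values())]

print(len(combinations))  # 27
print(combinations[0])    # {'header': 'H1', 'cta_text': 'C1', 'signup_fields': 'F1'}
```

Adding a fourth element with three ideas would raise the count to 81, which is why the number of cells grows so quickly.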

The end goal of multivariate testing is not only to find the optimal combination of variants, but also to discover which elements of the product have the greatest impact, positive or negative, on KPIs. As such, multivariate A/B testing is a higher-risk, higher-reward strategy. Because traffic is spread across so many combinations, multivariate testing gives you less data on any single combination of variants, but a greater chance of hitting the jackpot combination that produces a lift in your KPIs.
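To see which elements matter most, one simple approach is to estimate each element’s main effect: average the results of every cell that uses a given idea, then compare those averages. A minimal sketch using made-up conversion rates for a hypothetical 2 × 2 × 2 version of the header, call-to-action, and registration-fields example. It ignores interactions between elements and sampling noise, which a real analysis (for example, a regression model with interaction terms) would need to account for.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical per-cell conversion rates; keys are (header, cta_text, signup_fields).
results = {
    ("H1", "C1", "F1"): 0.050, ("H1", "C1", "F2"): 0.058,
    ("H1", "C2", "F1"): 0.052, ("H1", "C2", "F2"): 0.061,
    ("H2", "C1", "F1"): 0.049, ("H2", "C1", "F2"): 0.057,
    ("H2", "C2", "F1"): 0.051, ("H2", "C2", "F2"): 0.060,
}
element_names = ("header", "cta_text", "signup_fields")


def main_effects(results, element_names):
    """For each element, return the gap between its best and worst level's mean rate."""
    effects = {}
    for position, name in enumerate(element_names):
        # Group every cell's rate by the level this element takes in that cell.
        by_level = defaultdict(list)
        for cell, rate in results.items():
            by_level[cell[position]].append(rate)
        level_means = {level: mean(rates) for level, rates in by_level.items()}
        effects[name] = max(level_means.values()) - min(level_means.values())
    return effects


effects = main_effects(results, element_names)
for name, spread in sorted(effects.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: best-vs-worst gap = {spread:.4f}")
```

With these invented numbers, the registration fields would stand out as the element with the largest impact, while the header barely matters.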

Although it takes longer to collect enough data to reach statistical significance, once you have the results, it’s easier to implement multiple changes simultaneously based on them.
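The extra time comes from splitting traffic across more cells. A rough Python sketch, assuming each cell must be powered like a two-variant test to detect the same absolute lift, and ignoring multiple-comparison corrections (which would push the numbers higher). It uses the standard normal-approximation sample-size formula for a two-proportion test; `users_per_cell` and its inputs are hypothetical.

```python
from math import ceil
from statistics import NormalDist


def users_per_cell(baseline: float, lift: float, alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed per cell to detect an absolute lift in conversion
    rate with a two-sided two-proportion z-test (normal approximation)."""
    p1, p2 = baseline, baseline + lift
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / lift ** 2)


# Hypothetical inputs: 5% baseline conversion, aiming to detect +1 percentage point.
n = users_per_cell(baseline=0.05, lift=0.01)
for cells in (2, 27):
    print(f"{cells} cells -> about {cells * n:,} users in total")
```

Under these assumptions, a 27-cell test needs 13.5 times the traffic of a two-variant test (27 / 2), so at the same daily traffic it runs about 13.5 times as long.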

How to Choose the Right A/B Testing Strategy

Ultimately, whether you choose a sequential or multivariate testing strategy, or a combination of both, should depend on several factors. These include your product’s maturity, the amount of user traffic you have available, and your tolerance for risk.

Sequential testing is a reliable method for finding local maxima. By examining and modifying only one variable at a time, you’re highly likely to find the best version of that specific variable.

However, a sequential strategy by itself won’t help you find the global maximum for your product. For example, a split test of menu icons might show that icons with illustrations result in a higher click-through rate than those with photos. That may hold while the rest of the app stays constant during the test, but photo icons may beat out illustrations if other variables were to change.
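A toy example makes the local-versus-global point concrete. The sketch below uses made-up click-through rates for two hypothetical variables (icon style, plus a light or dark theme) and compares tuning one variable at a time with checking every combination, as a full multivariate test would.

```python
from itertools import product

# Hypothetical click-through rates for each (icon style, theme) combination.
ctr = {
    ("illustration", "light"): 0.12,
    ("photo", "light"): 0.10,
    ("illustration", "dark"): 0.11,
    ("photo", "dark"): 0.15,
}
icons = ("illustration", "photo")
themes = ("light", "dark")


def one_at_a_time(start):
    """Optimize one variable while holding the other fixed, until nothing changes."""
    icon, theme = start
    while True:
        # Split test the icon with the current theme, then the theme with the winning icon.
        best_icon = max(icons, key=lambda i: ctr[(i, theme)])
        best_theme = max(themes, key=lambda t: ctr[(best_icon, t)])
        if (best_icon, best_theme) == (icon, theme):
            return icon, theme
        icon, theme = best_icon, best_theme


local = one_at_a_time(start=("photo", "light"))
global_best = max(product(icons, themes), key=lambda cell: ctr[cell])
print("one variable at a time:", local, ctr[local])
print("all combinations:      ", global_best, ctr[global_best])
```

With the light theme held fixed, illustrations win the icon test, and the theme test then keeps the light theme, so the one-at-a-time search stops at a local maximum. Only by testing the combinations does the photo-plus-dark-theme cell show up as the overall winner.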

Because of this difference, you’ll often see a “generational divide” in which methods companies prefer. For products that have already found product-market fit, sequential A/B testing will help you tweak and fine-tune a given element. If you’re still on the road to product maturity, multivariate testing can help you move faster toward a solution that works for you.

That said, sequential and multivariate testing should both have a place in any well-developed experimentation program. The two methods complement each other and fill in gaps in your knowledge. Finding the mix that best helps you understand your users’ behavior takes some experimentation of its own.

Apptimize has a team of mobile strategists who can help you develop a roadmap for experimentation. To learn more about how top tech companies are using A/B testing to improve and grow their business, download our free guide The Definitive Guide to A/B Testing Digital Products.

Thanks for reading!
