Phased Rollouts and Holdouts

Apptimize fully supports phased rollouts and holdout experiments to accommodate both product operations and experimentation needs. A phased rollout happens when you change the way end users are allocated to the experiment as it runs. A holdout experiment is one in which a small control group of users is held out of the new product experience(s) to measure long-term effects. The next few sections offer more information on the feature:

- Use cases for phased rollouts and holdout experiments
- Setup instructions to run these experiments
- Analyzing phased rollouts and avoiding statistical biases
- Required SDK update to get started with the feature

Use Cases

Assessing long-term effects: Consumer behavior is often seasonal. A standard two- to four-week experiment demonstrates the short-term effects of a product decision but may not represent long-term customer behavior. Long-term effects can be assessed without leaving too many users out of a new product release. You can set up a holdout experiment that allocates a small percentage of end users to the original experience while most end users see the proven new product experience. See use case example from Pinterest. Apptimize allows you to transition from a short-term experiment to a long-term holdout experiment by simply updating the allocation percentage. The smooth transition takes away the headache of sample pollution and other statistical biases.

Assessing the effect of multiple product decisions: Another use case for holdout experiments is that the held-out group of users can serve as the control for multiple future product decisions. For instance, the original holdout group can be used to assess the effects of multiple iterations of your new recommendation algorithm. Compared to adding up the effects of multiple experiments, a holdout experiment makes it easier to estimate the combined effect of multiple product changes. You can read more about the complexity of aggregating experiment effects here.

Pausing allocation once the ideal sample size is reached: Apptimize allows you to pause allocation to an experiment or to specific variants. Pausing means that no more end users are allocated while current participants stay in the experiment. Pausing is useful for two main reasons. First, by not allocating more users, pausing saves users for other experiments. Second, pausing lets you make sure all participants in the experiment have had enough time to convert. This is helpful because not all conversions are expected to happen immediately after a user is exposed to a new experience.

Flexible ramp-up for operational needs: Occasionally there are operational reasons to slow down the rollout of a new experience. Unexpectedly increasing support volumes and pressure on backend infrastructure are two of many examples. Apptimize allows you to flexibly change allocation without interrupting the live experiment.

Setup Instructions

- The phased roll-out capabilities do not require special setup to use. Once SDK version 3.0 or higher is installed, simply use the Targeting step to edit your experiment and create new phases in your roll-out.

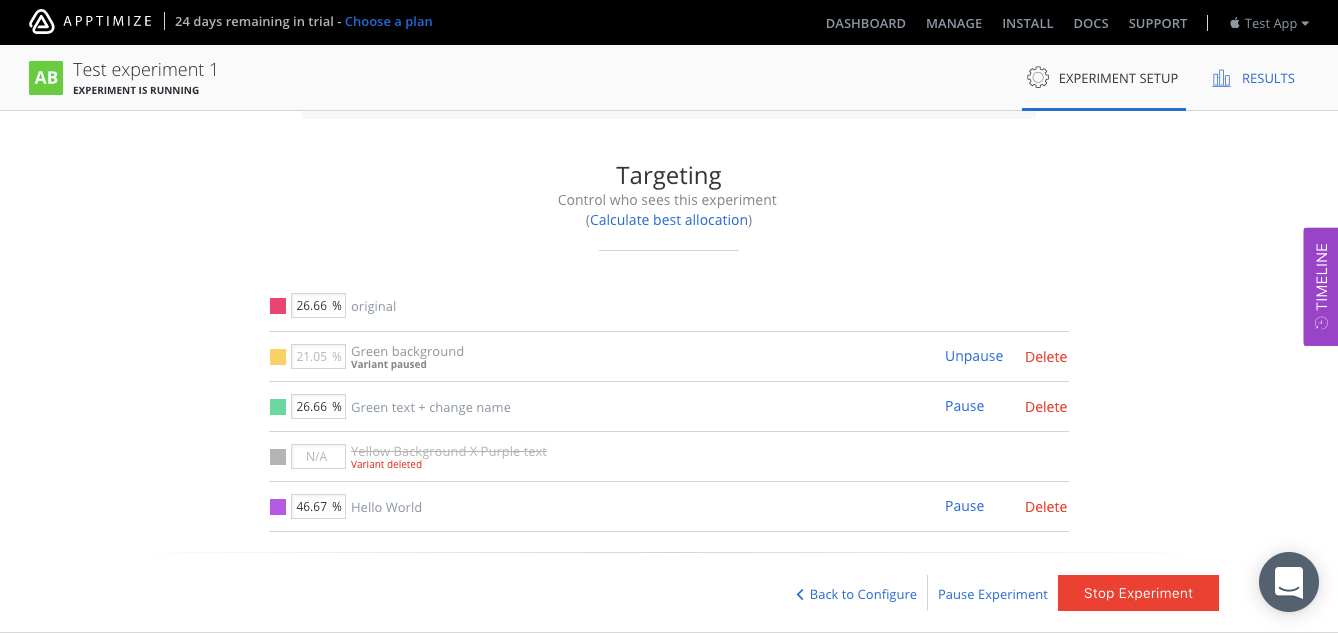

Pause / Unpause: You can pause the allocation of users into a specific variant or the entire experiment. To pause, click on the Pause option, review your changes and launch. There is also an option to Pause Experiment at the bottom of the Targeting and Launch page. Pause experiment means pausing allocation to all variants in the experiment.

Delete: To use the variant-level safety switch, click on Delete beside the variant you’d like to remove, then review changes and launch. Deleting a variant is irreversible, so please make sure you and your team have agreed on the decision.

New phase with new allocations: To change how end users are allocated in the experiment, simply update the allocation percentage, review changes and launch. These updates only change how future users are allocated; current participants remain in the variant they were assigned to. If the proportion of allocation among variants changes, a new phase is generated automatically to avoid statistical biases. See the next section for more details on statistical biases.
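The behavior described above (new allocations apply only to future users, while existing participants keep their variant) can be illustrated with a small simulation. This is a minimal sketch under stated assumptions, not Apptimize's implementation: the `assign` function, the in-memory `assignments` store, and the variant names are all hypothetical.

```python
import random

def assign(user_id, allocations, assignments, rng):
    """Return the user's variant, allocating only users who are new.

    allocations: variant name -> percent of all users (may sum to < 100).
    assignments: hypothetical sticky store of earlier decisions.
    """
    if user_id in assignments:
        # Existing participants never move, whatever the current allocation is.
        return assignments[user_id]
    roll = rng.random() * 100
    upper = 0.0
    for variant, percent in allocations.items():
        upper += percent
        if roll < upper:
            assignments[user_id] = variant
            return variant
    return None  # user was not allocated to the experiment

rng = random.Random(0)
assignments = {}
phase_1 = {"baseline": 50, "variant": 50}
phase_2 = {"baseline": 10, "variant": 90}

# Phase 1: the first 1000 users are split 50/50.
first = {u: assign(u, phase_1, assignments, rng) for u in range(1000)}
# After the allocation update, returning users keep their original variant.
again = {u: assign(u, phase_2, assignments, rng) for u in range(1000)}
# Only users who arrive after the update are split 10/90.
newcomers = [assign(u, phase_2, assignments, rng) for u in range(1000, 2000)]

print(first == again)
print(newcomers.count("variant") / len(newcomers))
```

The first print shows that earlier assignments are unchanged, and the second shows that roughly 90% of the newcomers land in the variant.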

Analyzing Phased Rollouts

Benefits of Analyzing Results in Phases

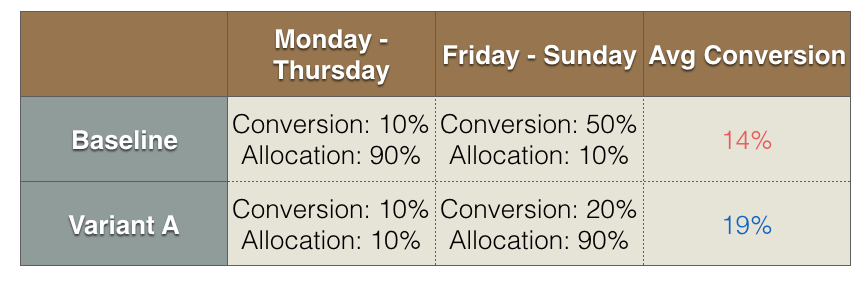

Statistical implications: Updating experiment allocation while the experiment is running can cause subtle but fairly serious statistical biases. The bias comes from weighting samples unevenly in a seasonal product.

For example, let’s say we have a product whose weekend users are naturally more active and engaged than weekday users. We set up a simple experiment with one Baseline and one Variant for this product. The experiment starts on Monday with Baseline receiving 90% of the allocation and Variant receiving 10%. From Monday to Thursday, Baseline and Variant produce similar conversion. Then on Friday, we update the experiment to allocate 90% to Variant and 10% to Baseline instead. Because of the weekend seasonality, both Baseline and Variant see much higher conversion on the weekend, and Baseline’s weekend conversion is much better than Variant’s. However, because we updated the allocation so that Variant received a disproportionate share of weekend users, Variant seems to be better than Baseline when we aggregate the weekday and weekend results.

This result is completely opposite to the conclusion we would have reached if the allocation hadn’t been updated, or if we had analyzed the results separately before and after the allocation update. This statistical phenomenon is called Simpson’s Paradox (see article for details). Here’s another resource that explains Simpson’s Paradox well.
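To make the weekday/weekend example concrete, here is a small worked calculation. All user and conversion counts are invented for illustration; they are chosen only so that Baseline is at least as good as Variant within each phase while the pooled numbers point the other way.

```python
# Hypothetical (users, conversions) per variant in each allocation phase.
phases = {
    "weekday (90/10)": {"baseline": (9000, 900), "variant": (1000, 100)},
    "weekend (10/90)": {"baseline": (1000, 300), "variant": (9000, 2250)},
}

def rate(users, conversions):
    """Conversion rate as a fraction of users."""
    return conversions / users

# Conversion rate of each variant within each phase.
per_phase = {
    name: {variant: rate(*counts) for variant, counts in arms.items()}
    for name, arms in phases.items()
}

# Conversion rate of each variant when both phases are pooled together.
pooled = {}
for variant in ("baseline", "variant"):
    users = sum(arms[variant][0] for arms in phases.values())
    conversions = sum(arms[variant][1] for arms in phases.values())
    pooled[variant] = rate(users, conversions)

for name, rates in per_phase.items():
    print(name, rates)
print("pooled", pooled)
```

Within each phase Baseline matches or beats Variant (10% vs. 10% on weekdays, 30% vs. 25% on the weekend), yet the pooled rates are 12% for Baseline and 23.5% for Variant, because most of Variant's users come from the high-converting weekend.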

Simpson’s Paradox is difficult to correct for since in normal cases, seasonality is fairly difficult to predict. In order to avoid the issue, Apptimize automatically segments the results so you can analyze the “weekday” and “weekend” data separately. We still suggest that you run each phase (allocation update phase) of the experiment long enough to capture enough users to represent your user population in order to evaluate the full effect of the experiment.

Analyzing Results from Experiments with Multiple Phases

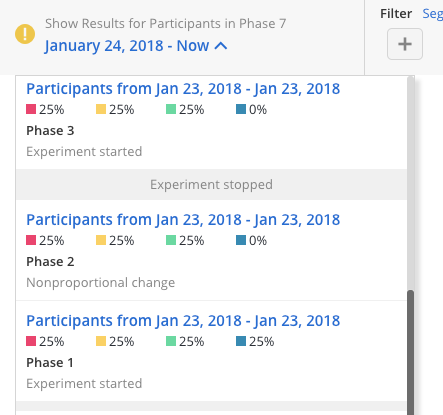

Apptimize automatically creates a new results phase when you update the proportion of allocation in an experiment. For instance, if you changed the experiment from a 50/50 split into a 70/30 split, a new phase will be created. If you paused allocation to one or more variants while still keeping the rest of the experiment running, a new phase will be created.
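The rule above depends on the proportion between variants, not on the absolute percentages. The following sketch shows one way to express that check. It is an illustration of the rule as described here, not Apptimize's actual logic; `proportions` and `starts_new_phase` are hypothetical helpers, and allocations are assumed to be whole-number percentages.

```python
from fractions import Fraction

def proportions(allocation):
    """Normalize integer percentages to exact shares of the allocated traffic."""
    total = sum(allocation.values())
    if total == 0:
        raise ValueError("no traffic is allocated to any variant")
    return {variant: Fraction(pct, total) for variant, pct in allocation.items()}

def starts_new_phase(old, new):
    """True when the split among variants differs between two allocations."""
    return proportions(old) != proportions(new)

# 50/50 -> 70/30 changes the proportion among variants.
changed_split = starts_new_phase(
    {"baseline": 50, "variant": 50}, {"baseline": 70, "variant": 30}
)
# Pausing allocation to one variant also changes the proportion.
paused_variant = starts_new_phase(
    {"baseline": 50, "variant": 50}, {"baseline": 50, "variant": 0}
)
# Scaling 1%/9% up to 10%/90% keeps the same 1:9 proportion.
scaled_up = starts_new_phase(
    {"baseline": 1, "variant": 9}, {"baseline": 10, "variant": 90}
)

print(changed_split, paused_variant, scaled_up)
```

Using exact fractions avoids floating-point rounding when comparing splits such as 1/9 and 10/90.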

When an experiment is stopped and then restarted, Apptimize treats it as an entirely new experiment. This means users are allocated randomly to the experiment no matter which variant they were allocated to before the experiment stopped. It is not reasonable to analyze the experiment’s results across stops and restarts, just as we would never average the results of two different experiments. Hence, a new results phase is created when an experiment is restarted. If you are testing experiment setup on production, you can use the results phases as a way to ignore invalid data from the testing phases. There is no need to “clear” the data from the testing phases of the experiment.

The results data presented in each phase should be analyzed independently as if each phase is an independent experiment from other phases. If there are major differences in the results among different experiment phases, please reassess if each phase has had enough participants and/or consider re-testing with more precise targeting.

See example experiment phases filter below:

Best Practices on Using Experiment Phases

Plan your phases in advance: We recommend that you treat each experiment phase or allocation update as if you were planning a new experiment. Determine how much time a phase needs to get enough users allocated to each variant. The Experiment Runtime Calculator applies here too.
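As a rough planning aid, the sketch below estimates how many users each variant needs and how many days a phase must run to reach that number. It uses the standard normal-approximation formula for comparing two conversion rates, which is an assumption here and not necessarily what the Experiment Runtime Calculator does. The function names, conversion rates, and traffic figures are hypothetical.

```python
from math import ceil
from statistics import NormalDist

def users_per_variant(p_base, p_new, alpha=0.05, power=0.8):
    """Approximate users needed per variant to detect p_base vs. p_new
    with a two-sided test (normal approximation for two proportions)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p_base * (1 - p_base) + p_new * (1 - p_new)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p_base - p_new) ** 2)

def days_for_phase(needed, daily_new_users, allocation):
    """Days until the smallest variant has been allocated `needed` users.

    allocation: variant name -> percent of daily new users.
    """
    smallest_share = min(allocation.values()) / 100
    return ceil(needed / (daily_new_users * smallest_share))

# Hypothetical inputs: 10% baseline conversion, hoping to detect 12%.
needed = users_per_variant(0.10, 0.12)
even_split_days = days_for_phase(needed, 2000, {"baseline": 50, "variant": 50})
holdout_days = days_for_phase(needed, 2000, {"baseline": 10, "variant": 90})

print(needed, even_split_days, holdout_days)
```

With the same traffic, the 10%/90% phase takes several times longer than the 50/50 phase, because the smallest variant determines when the phase has enough users.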

For scaling feature roll-out: When scaling a feature roll-out while also testing the effects of the new feature, we recommend that you scale up the allocation proportionally as much as possible. For instance, let’s say you are fairly certain about releasing a feature and want to eventually hold out 10% of your users from the feature to assess long-term effects. You can start by allocating 1% of your users to baseline and 9% to the new feature. Once the new feature proves to be stable, you can proportionally scale up the 1%/9% split to 10%/90%. Frequently changing the proportion of allocation splits the experiment into more phases, which reduces the power of the experiment and increases its required run time.

For the advanced use cases of assessing multiple product decisions over long periods of time, we recommend that you have at least one global holdout group for each major new feature. This can be set up as a long-running experiment that devotes a small allocation to the baseline and the rest to the new feature. When running subsequent experiments on the feature, make sure your dynamic variables are set up properly so the holdout group will not be opted into the feature. The key here is to continuously allocate a small percentage of users to the holdout while running more experiments on the rest of your user base. Here’s an interesting study the Airbnb team did which compared aggregating results of multiple experiments vs. using a long-term holdout group to assess multiple product decisions.

Required SDK Version

The phased rollout feature is compatible with iOS SDK version 3.0.0+ and Android SDK version 3.0.0+. To run phased rollouts, please update your SDK and target only app versions that include the updated SDK. We recommend using app version targeting to filter out app versions that ship an older SDK so that the feature works properly.